Offscreen Effect Player Demo App

This document describes the legacy technology of the Banuba SDK. In the future, support for OffscreenEffectPlayer will be dropped completely. Use the PlayerAPI instead.

Requirements

- Latest stable Android Studio

- Latest Gradle plugin

- Latest NDK

Get the client token

To start working with the Banuba SDK Demo project for Android, you need a client token. To receive a trial client token, fill in the form on the banuba.com website or contact us at info@banuba.com.

Before building the Android Offscreen Effect Player Demo project, place your client token inside an appropriate file in the following location:

src/offscreen/src/main/java/com/banuba/offscreen/app/BanubaClientToken.java

Client token usage example

final class BanubaClientToken {
    public static final String KEY = "YOUR_TOKEN_HERE";

    private BanubaClientToken() {
    }
}

Get the Banuba SDK archive

With the client token, you will also receive the Banuba SDK archive for Android which contains:

- Banuba Effect Player (compiled Android library project with .aar extension),

- Android project folder (src) which contains the demo apps, located in src/app and src/offscreen respectively.

Build the Banuba SDK OEP Demo app

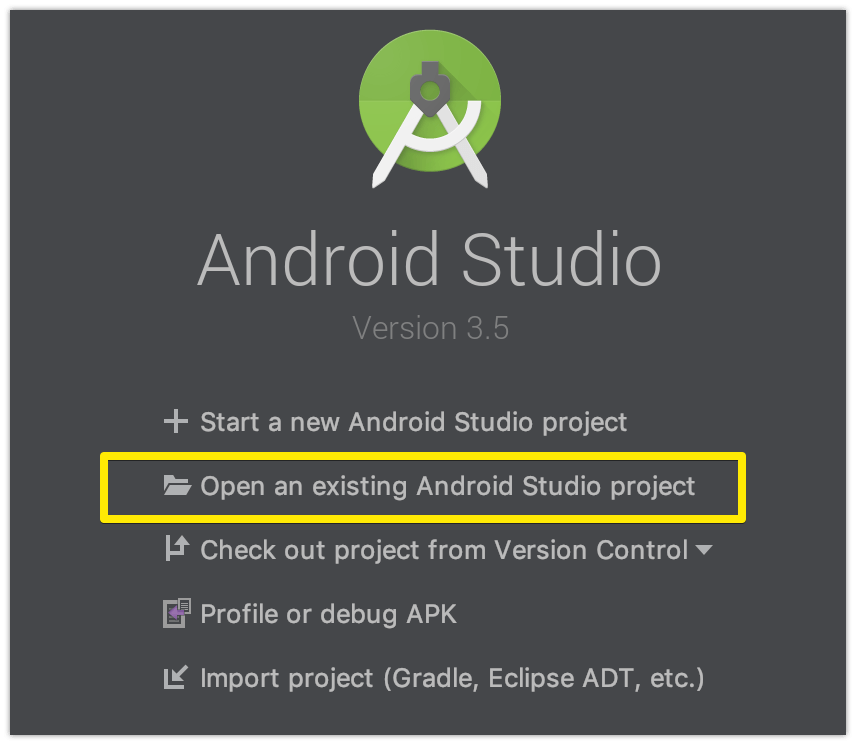

- Import the Android project under the src folder in Android Studio.

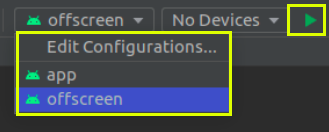

- Select the needed application for the build.

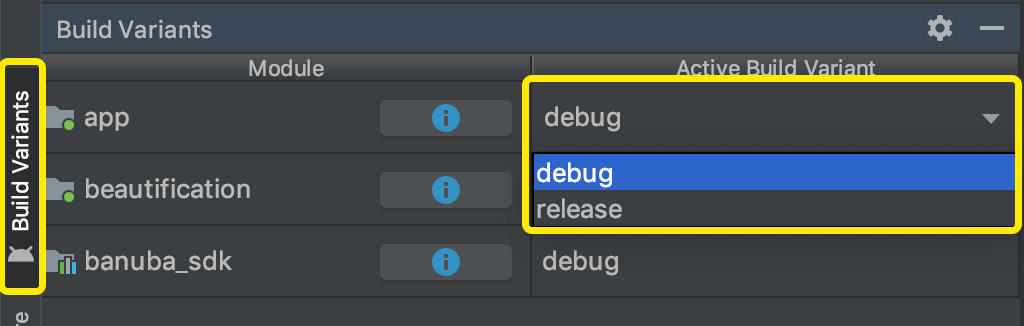

- Select Build Variant from the left side menu in Android Studio.

The Debug build variant lets you properly debug and profile the app while it runs. The Release build variant lets you test the release version of the application, which runs faster.

Offscreen Effect Player Demo App in brief

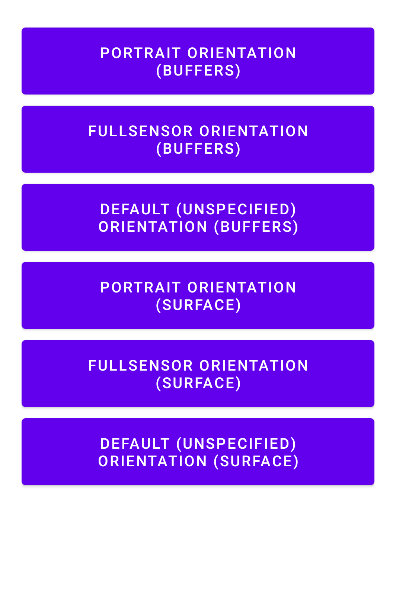

The application demonstrates different image-processing pipelines in which the active effect is rendered to a buffer in memory. It also shows how to configure the input image orientation for processing in different device orientations and for different video frame sources.

The SURFACE mode buttons in the picture above demonstrate using the Offscreen Effect Player with a surface texture.

Offscreen Effect Player Demo app contains the following packages:

- camera: the camera capture functionality. See Camera2Simple, Camera2SimpleThread and CameraWrapper.

- fragments: the fragment configuration functionality.

- gl: the required OpenGL ES configuration functionality.

- orientation: the device orientation change observing functionality.

- render: the rendering functionality. See CameraRenderSurfaceTexture and CameraRenderYUVBuffers.

Offscreen Effect Player notes

The BanubaSdkManager is not used with the OEP. Use the OffscreenEffectPlayer class instead. The only BanubaSdkManager method you may use is the static BanubaSdkManager.initialize(Context, BNB_KEY), which initializes the Banuba SDK with the token. See the CameraFragment.java class in the Offscreen Demo app. With the OEP, the Banuba SDK can be initialized in two ways: using EffectPlayerConfiguration or using OffscreenEffectPlayerConfig:

// NOTE: to initialize the EffectPlayer inside the Offscreen Effect Player, uncomment the next code block

/*final OffscreenEffectPlayerConfig config =
        OffscreenEffectPlayerConfig.newBuilder(SIZE, mBuffersQueue).build();
mOffscreenEffectPlayer = new OffscreenEffectPlayer(mContext, config, BNB_KEY);
*/
BanubaSdkManager.initialize(mContext, BNB_KEY);
// here config is the EffectPlayerConfiguration of the pre-created EffectPlayer (created elsewhere)
mEffectPlayer = Objects.requireNonNull(EffectPlayer.create(config));
mOffscreenEffectPlayer = new OffscreenEffectPlayer(
        mContext, mEffectPlayer, SIZE, OffscreenSimpleConfig.newBuilder(mBuffersQueue).build()
);

In the code above, the mEffectPlayer object is passed to the OffscreenEffectPlayer constructor, so the OEP is initialized with a pre-created EffectPlayer. This lets you attach additional EffectPlayer listeners.

The OEP is part of the banuba_sdk module, which ships with sources, so you can inspect the structure and architecture of the OEP. The key idea is that the OEP is not responsible for acquiring camera frames. The OEP only processes an input image and returns the processed image synchronously, either as a buffer (see OffscreenEffectPlayer.setImageProcessListener) or by drawing it on a texture (see OffscreenEffectPlayer.setSurfaceTexture).

The Offscreen Effect Player creates a rendering surface whose width is the smaller of the SIZE dimensions and whose height is the larger one. In the sample above, the surface size is 720x1280 (width x height).
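The min/max rule can be expressed as a small helper. This is an illustrative sketch only: OepSurfaceSizeSketch and surfaceSize are hypothetical names, not Banuba SDK APIs, since the OEP computes the surface size internally.

```java
/** Hypothetical helper mirroring the OEP surface-size rule; not part of the Banuba SDK. */
final class OepSurfaceSizeSketch {

    private OepSurfaceSizeSketch() {
    }

    /**
     * Returns {width, height} of the rendering surface for the given SIZE dimensions:
     * the smaller dimension becomes the width and the larger one becomes the height.
     */
    static int[] surfaceSize(int sizeWidth, int sizeHeight) {
        final int width = Math.min(sizeWidth, sizeHeight);
        final int height = Math.max(sizeWidth, sizeHeight);
        return new int[] {width, height};
    }

    public static void main(String[] args) {
        final int[] surface = surfaceSize(1280, 720);
        System.out.println(surface[0] + "x" + surface[1]); // prints "720x1280"
    }
}
```

Whichever order the SIZE dimensions are given in, the resulting surface is 720x1280.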

The surface size determines the effective rendering area of the OEP. For example, if the surface size is 720x1280 (width x height), the surface looks as follows:

If the UI changes orientation and has a size of 1280x720, the OEP surface looks as follows:

The rendering surface size of OEP determines the size of the output image.

The Offscreen Effect Player automatically manages the surface size when the application changes UI orientation.

To process input camera frames, use the OffscreenEffectPlayer.processImage or OffscreenEffectPlayer.processFullImageData methods. You can get the processed image buffer via ImageProcessedListener.onImageProcessed (see the OffscreenEffectPlayer.setImageProcessListener method), or the OEP can draw the processed image on a texture (see OffscreenEffectPlayer.setSurfaceTexture). The following methods must not be used with the OEP: BanubaSdkManager.attachSurface, BanubaSdkManager.effectPlayerPlay, BanubaSdkManager.startForwardingFrames, and other BanubaSdkManager methods related to camera frame processing.

To load an effect, use the OffscreenEffectPlayer.loadEffect method instead of BanubaSdkManager.loadEffect.

To create FullImageData, use the OrientationHelper class to set the correct face orientation based on the camera and image orientations.

The face orientation parameter ensures the proper performance of the recognition model. The correct image orientation ensures the right image processing by the neural network.

Using OrientationHelper involves the following steps:

OrientationHelper.getInstance(context).startDeviceOrientationUpdates(); // starting the OrientationEventListener
...
final boolean isRequireMirroring = isFrontCamera; // this parameter should be configured externally and depend on camera facing (front/back)
int deviceOrientationAngle = OrientationHelper.getInstance(context).getDeviceOrientationAngle();
if (!isFrontCamera) {
    // this correction is needed for back camera frames correct orientation making because OEP does not know about camera facing at all
    if (deviceOrientationAngle == 0) {
        deviceOrientationAngle = 180;
    } else if (deviceOrientationAngle == 180) {
        deviceOrientationAngle = 0;
    }
}
final FullImageData.Orientation orientation = OrientationHelper.getFullImageDataOrientation(
        videoFrame.getRotation(),
        deviceOrientationAngle,
        isRequireMirroring);
FullImageData fullImageData = new FullImageData(new Size(width, height), i420Buffer.getDataY(),
        i420Buffer.getDataU(), i420Buffer.getDataV(), i420Buffer.getStrideY(),
        i420Buffer.getStrideU(), i420Buffer.getStrideV(), 1, 1, 1, orientation);

Here, videoFrame is a WebRTC VideoFrame object, but it can be a frame object of any other type. Once the FullImageData object is created, pass it to the OffscreenEffectPlayer.processFullImageData method.
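The back-camera correction in the OrientationHelper snippet can be isolated into a pure function, which makes it easy to unit-test. The class and method names below are hypothetical; the logic is copied from the snippet: for the back camera, 0 and 180 degrees are swapped, and every other angle passes through unchanged.

```java
/** Hypothetical helper, not part of the Banuba SDK. */
final class BackCameraAngleSketch {

    private BackCameraAngleSketch() {
    }

    /**
     * Applies the back-camera correction: the OEP does not know the camera facing,
     * so for the back camera 0 and 180 degrees are swapped while 90 and 270 are kept.
     */
    static int correctDeviceOrientationAngle(int deviceOrientationAngle, boolean isFrontCamera) {
        if (isFrontCamera) {
            return deviceOrientationAngle; // the front camera needs no correction
        }
        switch (deviceOrientationAngle) {
            case 0:
                return 180;
            case 180:
                return 0;
            default:
                return deviceOrientationAngle;
        }
    }

    public static void main(String[] args) {
        System.out.println(correctDeviceOrientationAngle(0, false));  // prints 180
        System.out.println(correctDeviceOrientationAngle(90, false)); // prints 90
        System.out.println(correctDeviceOrientationAngle(0, true));   // prints 0
    }
}
```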

You can also review how the Offscreen Demo app manages the input image orientation. The application's UI orientation is required to determine the OEP input image orientation:

mOrientationListener = new FilteredOrientationEventListener(context) {
    @MainThread
    @Override
    public void onOrientationFilteredChanged(int deviceSensorOrientation, int displaySurfaceRotation) {
        mCameraHandler.sendOrientationAngles(deviceSensorOrientation, displaySurfaceRotation);
    }
};

Based on this information, the input image orientation is calculated:

private ImageOrientation getImageOrientation(boolean isRequireMirroring) {
    int rotation = 90 * mDisplaySurfaceRotation;
    int deviceOrientationAngle = mDeviceOrientationAngle;
    if (mFacing == CameraCharacteristics.LENS_FACING_BACK) {
        rotation = 360 - rotation;
        if (mDeviceOrientationAngle == 0) {
            deviceOrientationAngle = 180;
        } else if (mDeviceOrientationAngle == 180) {
            deviceOrientationAngle = 0;
        }
    }
    final int imageOrientation = (mSensorOrientation + rotation) % 360;
    return ImageOrientation.getForCamera(imageOrientation, deviceOrientationAngle, mDisplaySurfaceRotation, isRequireMirroring);
}
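The angle arithmetic of getImageOrientation can be checked in isolation with a plain-Java sketch. ImageOrientationSketch is a hypothetical name; it reproduces only the integer computation of imageOrientation and leaves out ImageOrientation.getForCamera, which belongs to the SDK.

```java
/** Hypothetical helper, not part of the Banuba SDK. */
final class ImageOrientationSketch {

    private ImageOrientationSketch() {
    }

    /**
     * Mirrors the angle arithmetic of getImageOrientation.
     *
     * @param sensorOrientation      camera sensor orientation in degrees (0, 90, 180 or 270)
     * @param displaySurfaceRotation display rotation index (0..3), converted to degrees by multiplying by 90
     * @param isBackCamera           true for LENS_FACING_BACK
     */
    static int imageOrientationDegrees(int sensorOrientation, int displaySurfaceRotation, boolean isBackCamera) {
        int rotation = 90 * displaySurfaceRotation;
        if (isBackCamera) {
            // for the back camera the display rotation is effectively subtracted instead of added
            rotation = 360 - rotation;
        }
        return (sensorOrientation + rotation) % 360;
    }

    public static void main(String[] args) {
        System.out.println(imageOrientationDegrees(90, 1, false)); // prints 180
        System.out.println(imageOrientationDegrees(90, 1, true));  // prints 0
    }
}
```

For a typical 90-degree sensor with the display rotated by 90 degrees, the front camera yields 180 while the back camera yields 0, which is why the facing must be known before the angles are combined.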

Use the OffscreenEffectPlayer.callJsMethod method instead of BanubaSdkManager.effectManager.current().callJsMethod. Note that OffscreenEffectPlayer.callJsMethod is obsolete; use the OffscreenEffectPlayer.evalJs method instead.

The OEP may return the processed image via the ImageProcessedListener.onImageProcessed callback method (see the OffscreenEffectPlayer.setImageProcessListener method), or it may draw the processed image on a texture (see OffscreenEffectPlayer.setSurfaceTexture). Therefore, the IEventCallback.onFrameRendered callback is not used with the OEP at all; use ImageProcessedListener.onImageProcessed instead. See CameraRenderYUVBuffers.constructHandler in the Offscreen Demo app sources for more details:

protected CameraRenderHandler constructHandler() {

    mHandler = new CameraRenderHandler(this);
    mOffscreenEffectPlayer.setImageProcessListener(result -> {
        if (mImageProcessResult != null && mBuffersQueue != null) {
            mBuffersQueue.retainBuffer(mImageProcessResult.getBuffer());
        }
        mImageProcessResult = result;
        mHandler.sendDrawFrame();
    }, mHandler);
    return mHandler;
}

If rendering to the texture is not used, the output image orientation matches the input image orientation.
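The listener keeps only the latest result and hands the previous result's buffer back to the buffers queue. The plain-Java sketch below models that recycling pattern under an explicit assumption: that retainBuffer returns a buffer to the pool for reuse. BufferPoolSketch and LatestResultHolder are hypothetical classes, not the demo's buffers queue, and the drawing step (sendDrawFrame) is omitted.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;

/** Hypothetical buffer pool; assumes retainBuffer() hands a buffer back for reuse. */
final class BufferPoolSketch {
    private final ArrayDeque<ByteBuffer> mFree = new ArrayDeque<>();
    private final int mBufferSize;

    BufferPoolSketch(int bufferSize) {
        mBufferSize = bufferSize;
    }

    /** Takes a free buffer, allocating a new direct buffer when none is available. */
    synchronized ByteBuffer takeBuffer() {
        final ByteBuffer buffer = mFree.poll();
        return buffer != null ? buffer : ByteBuffer.allocateDirect(mBufferSize);
    }

    /** Returns a buffer to the pool so it can be reused for a later frame. */
    synchronized void retainBuffer(ByteBuffer buffer) {
        buffer.clear();
        mFree.push(buffer);
    }

    synchronized int freeCount() {
        return mFree.size();
    }
}

/** Keeps only the latest processed buffer and recycles the previous one. */
final class LatestResultHolder {
    private final BufferPoolSketch mPool;
    private ByteBuffer mCurrent;

    LatestResultHolder(BufferPoolSketch pool) {
        mPool = pool;
    }

    void onImageProcessed(ByteBuffer result) {
        if (mCurrent != null) {
            mPool.retainBuffer(mCurrent); // the previous result is replaced, so recycle its buffer
        }
        mCurrent = result;
    }

    ByteBuffer current() {
        return mCurrent;
    }

    public static void main(String[] args) {
        final BufferPoolSketch pool = new BufferPoolSketch(1024);
        final LatestResultHolder holder = new LatestResultHolder(pool);
        holder.onImageProcessed(pool.takeBuffer());
        holder.onImageProcessed(pool.takeBuffer());
        System.out.println(pool.freeCount()); // prints 1: the first buffer was recycled
    }
}
```

Recycling instead of allocating a fresh buffer per frame keeps memory usage bounded at the cost of requiring every consumer to release its buffer once it is done with it.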

The ImageProcessResult buffer holds the resulting YUV image in the following layout:

where the bytes per row of the Y plane are given by ImageProcessResult.getPixelStride(0), and those of the U and V planes by ImageProcessResult.getPixelStride(1) and ImageProcessResult.getPixelStride(2), respectively.
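Given the per-plane bytes-per-row values, the size and offset of each plane follow from simple arithmetic. The sketch below assumes a planar I420 layout in which the Y, U and V planes follow one another in a single buffer and the chroma planes have half the rows of the luma plane (rounded up). I420LayoutSketch is a hypothetical name.

```java
/** Hypothetical helper for a planar I420 buffer; not part of the Banuba SDK. */
final class I420LayoutSketch {

    private I420LayoutSketch() {
    }

    /** Returns {ySize, uSize, vSize, uOffset, vOffset, totalSize} in bytes. */
    static int[] planeLayout(int height, int bytesPerRowY, int bytesPerRowU, int bytesPerRowV) {
        final int chromaHeight = (height + 1) / 2; // U and V have half the rows, rounded up
        final int ySize = bytesPerRowY * height;
        final int uSize = bytesPerRowU * chromaHeight;
        final int vSize = bytesPerRowV * chromaHeight;
        final int uOffset = ySize;         // U plane starts right after the Y plane
        final int vOffset = ySize + uSize; // V plane starts right after the U plane
        return new int[] {ySize, uSize, vSize, uOffset, vOffset, ySize + uSize + vSize};
    }

    public static void main(String[] args) {
        final int[] layout = planeLayout(1280, 720, 360, 360);
        System.out.println(layout[5]); // prints 1382400
    }
}
```

For a tightly packed 720x1280 image this gives 1.5 bytes per pixel in total, the usual I420 footprint.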

The following code sample shows how to get an I420 buffer from the ImageProcessResult:

// result -> this is an ImageProcessResult object in onImageProcessed
final ByteBuffer buffer = result.getBuffer();
mBuffersQueue.retainBuffer(buffer);
JavaI420Buffer I420buffer = JavaI420Buffer.wrap(
        result.getWidth(), result.getHeight(),
        result.getPlaneBuffer(0), result.getBytesPerRowOfPlane(0), // Y plane
        result.getPlaneBuffer(1), result.getBytesPerRowOfPlane(1), // U plane
        result.getPlaneBuffer(2), result.getBytesPerRowOfPlane(2), // V plane
        () -> {
            JniCommon.nativeFreeByteBuffer(buffer);
        });